And it's not because Kafka is broken.

It's because you're ignoring some patterns that make it easier.

So if your Kafka setup feels like a spaghetti mess of topics, consumer groups, retries, and dead-letter queues… you're not alone.

But this article will break down the real patterns that actually help — the ones you should've known before that one topic exploded with 100 million messages at 2AM on a Saturday.

Let's go.

1. Topic Per Event Type (Not Per Use Case)

Let's start with the basics.

Bad Pattern:

orders-service-topic

shipping-service-topic

analytics-service-topic

Each service having its own topic? Nope. That's not event-driven. That's just RPC with extra steps.

Better Pattern:

order.created

order.shipped

order.cancelled

You want topics to represent facts, not who created them.

That way, multiple services can react to the same event, and you keep your architecture loosely coupled.

2. Consumer Groups = One Logical Work Unit

Consumer groups are simple: one group = one logical business purpose.

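But many teams mess this up. They create one consumer group per instance, so every instance receives every message and the work gets done twice. Or, even worse, they reuse one group for different jobs, so each job only sees a share of the messages.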

Good Pattern:

inventory-updater

email-sender

analytics-processor

Each of these is its own group. They all consume order.created and do their job independently.

This decouples everything. It also scales beautifully — Kafka will load-balance partitions among consumers within the same group.
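Here's a rough sketch of what that looks like, with no Kafka involved. It assumes a hypothetical topic with 6 partitions and made-up instance names, and it uses a simplified round-robin split. Real Kafka assignors are configurable and more involved, so treat this as an illustration of the idea only.

```python
def assign_partitions(partitions, members):
    """Split partitions across the members of ONE group (simplified round-robin)."""
    assignment = {member: [] for member in members}
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return assignment


# Hypothetical: order.created has 6 partitions.
partitions = list(range(6))

# Group ids come from the list above; instance names are invented.
groups = {
    "inventory-updater": ["inv-1", "inv-2", "inv-3"],
    "email-sender": ["mail-1"],
    "analytics-processor": ["an-1", "an-2"],
}

# Every group is assigned ALL partitions; inside a group they are shared out.
plan = {group: assign_partitions(partitions, members)
        for group, members in groups.items()}

for group, assignment in plan.items():
    print(group, assignment)
```

Each group covers all six partitions on its own, which is why adding a new group never steals messages from an existing one. Adding an instance to a group only changes how that group's share is split.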

3. Avoid Chained Events Without State

Say you get an order.created, and you immediately publish an order.verified before even checking anything.

That's dangerous. Don't turn Kafka into a dumb message-passer.

Use this pattern instead: event + state.

Instead of chaining five events together just to simulate a workflow, have the event trigger a service that owns logic and state, and only emit the next event if necessary.

This prevents accidental infinite loops, ghost events, and confusing replay bugs.
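A minimal sketch of event + state, assuming a stock check is what "verified" means here. The `OrderVerifier` class and the `order_id`, `sku`, and `qty` fields are made up for illustration, and the state lives in memory where a real service would use its own database.

```python
from dataclasses import dataclass, field


@dataclass
class OrderVerifier:
    """Owns the state; emits order.verified only after a real check passes."""
    stock: dict                                   # sku -> units available (hypothetical)
    status: dict = field(default_factory=dict)    # order_id -> "verified" | "rejected"

    def handle_order_created(self, event):
        order_id = event["order_id"]
        # Replay guard: an order that already has a decision emits nothing new.
        if order_id in self.status:
            return []
        if self.stock.get(event["sku"], 0) >= event["qty"]:
            self.stock[event["sku"]] -= event["qty"]
            self.status[order_id] = "verified"
            return [("order.verified", {"order_id": order_id})]
        self.status[order_id] = "rejected"
        return []


svc = OrderVerifier(stock={"widget": 1})
event = {"order_id": "o-1", "sku": "widget", "qty": 1}
first = svc.handle_order_created(event)    # check passes, one event emitted
replay = svc.handle_order_created(event)   # same event replayed, nothing emitted
```

The handler returns the events to publish instead of publishing them itself, so the decision and the emission stay in one place and a replay cannot produce a ghost `order.verified`.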

4. Use the Outbox Pattern

Ever tried to produce to Kafka after inserting into a DB, and then your Kafka call fails?

Now your DB is updated… but Kafka never got the message.

Congratulations — you just created an inconsistency.

Outbox Pattern to the rescue.

Store the event in your database as part of the same transaction, like:

    BEGIN;
    INSERT INTO orders (...) VALUES (...);
    INSERT INTO outbox (event_type, payload) VALUES ('order.created', '{...}');
    COMMIT;

Then run a background job that reads the outbox table and sends the events to Kafka.

Atomic. Reliable. Replayable.
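To make both halves concrete, here is a small sketch using SQLite from the Python standard library. The table columns, the `sent` flag, and the `publish` callback are assumptions for illustration; `publish` stands in for whatever Kafka producer you actually use.

```python
import json
import sqlite3


def init_db(conn):
    conn.executescript("""
        CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL NOT NULL);
        CREATE TABLE outbox (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            event_type TEXT NOT NULL,
            payload TEXT NOT NULL,
            sent INTEGER NOT NULL DEFAULT 0   -- assumed bookkeeping column
        );
    """)


def create_order(conn, order_id, total):
    # One transaction: both rows commit, or neither does.
    with conn:
        conn.execute("INSERT INTO orders (id, total) VALUES (?, ?)", (order_id, total))
        conn.execute(
            "INSERT INTO outbox (event_type, payload) VALUES (?, ?)",
            ("order.created", json.dumps({"id": order_id, "total": total})),
        )


def relay_outbox(conn, publish):
    """Send unsent rows in insertion order; mark a row sent only after publish returns."""
    rows = conn.execute(
        "SELECT id, event_type, payload FROM outbox WHERE sent = 0 ORDER BY id"
    ).fetchall()
    sent = 0
    for row_id, event_type, payload in rows:
        publish(event_type, payload)   # stand-in for a real Kafka producer call
        with conn:
            conn.execute("UPDATE outbox SET sent = 1 WHERE id = ?", (row_id,))
        sent += 1
    return sent


conn = sqlite3.connect(":memory:")
init_db(conn)
create_order(conn, 123, 100.0)

published = []
relay_outbox(conn, lambda event_type, payload: published.append((event_type, payload)))
```

If `publish` raises, the row stays unsent and the next run picks it up again. A crash between the publish and the `UPDATE` will resend the event, so this gives at-least-once delivery and consumers should tolerate duplicates.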

5. Retry with Backoff, Not Blind Replays

People think "Kafka makes everything replayable" so they abuse it. They just reconsume failed events without thinking.

But what if the error was due to a downstream system being down?

You're just hammering it harder.

Use a retry queue with exponential backoff, or a client framework with retry topics built in, such as Spring for Apache Kafka.

Or manually create topics like:

order.created.retry.1m

order.created.retry.10m

order.created.dlq

You route failed messages progressively — this gives time for issues to resolve without flooding your system.
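The routing logic itself can be tiny. This sketch assumes the topic names above, reads the delays (60 and 600 seconds) off the `1m` and `10m` suffixes, and leaves out the consumer that actually waits before processing. The function names are invented for illustration.

```python
RETRY_CHAIN = [
    "order.created",
    "order.created.retry.1m",
    "order.created.retry.10m",
    "order.created.dlq",
]
# Assumed delays, taken from the topic suffixes.
DELAY_SECONDS = {"order.created.retry.1m": 60, "order.created.retry.10m": 600}


def next_topic(current):
    """Where a message goes after failing on `current`; the DLQ is terminal."""
    i = RETRY_CHAIN.index(current)
    return RETRY_CHAIN[min(i + 1, len(RETRY_CHAIN) - 1)]


def route_failure(current, failed_at):
    """Return (target topic, earliest time in seconds the message may be retried)."""
    target = next_topic(current)
    return target, failed_at + DELAY_SECONDS.get(target, 0)


def backoff_delay(attempt, base=1.0, cap=600.0):
    """Plain exponential backoff for in-process retries: base * 2**attempt, capped."""
    return min(cap, base * 2 ** attempt)


# Walk one message that keeps failing through the whole chain.
hops = []
topic = "order.created"
while topic != "order.created.dlq":
    topic, not_before = route_failure(topic, failed_at=0)
    hops.append((topic, not_before))
```

The retry consumer for each topic is expected to hold a message until its `not_before` time has passed. Anything that lands in the DLQ stops moving and waits for a human or a repair job.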

6. Schema Registry Isn't Optional

If your events look like this:

    {
      "id": 123,
      "user": "john",
      "total": 100
    }

That's fine… until someone adds a new field:

    {
      "id": 123,
      "user": "john",
      "total": 100,
      "vip": true
    }

Now your consumers break. Or worse, silently misbehave.

Use Avro/Protobuf + Schema Registry.

You get:

- Forward/backward compatibility

- Strict typing

- Evolution support

You'll thank yourself when teams grow.
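To see why compatibility rules matter, here is a hand-rolled stand-in. This is not Avro, Protobuf, or the Schema Registry API; `ORDER_V1`, `ORDER_V2`, and `decode` are invented for illustration. It only mimics the behavior those tools give you: new fields ship with a default, and readers ignore fields they don't know.

```python
REQUIRED = object()   # marker for fields that have no default

# field name -> (type, default)
ORDER_V1 = {"id": (int, REQUIRED), "user": (str, REQUIRED), "total": (int, REQUIRED)}
ORDER_V2 = {**ORDER_V1, "vip": (bool, False)}   # the new field ships WITH a default


def decode(payload, schema):
    """Read `payload` with the reader's schema: strict types, defaults, unknowns ignored."""
    record = {}
    for name, (ftype, default) in schema.items():
        if name in payload:
            value = payload[name]
            if type(value) is not ftype:
                raise TypeError(
                    f"{name}: expected {ftype.__name__}, got {type(value).__name__}"
                )
            record[name] = value
        elif default is REQUIRED:
            raise KeyError(f"missing required field: {name}")
        else:
            record[name] = default
    return record


old_event = {"id": 123, "user": "john", "total": 100}
new_event = {"id": 123, "user": "john", "total": 100, "vip": True}

upgraded_reader = decode(old_event, ORDER_V2)   # backward compatible: vip falls back to False
legacy_reader = decode(new_event, ORDER_V1)     # forward compatible: vip is ignored
```

A registry's job is to run this kind of check when a schema is registered, so an incompatible change is rejected before any producer ships it, instead of being discovered by a consumer in production.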

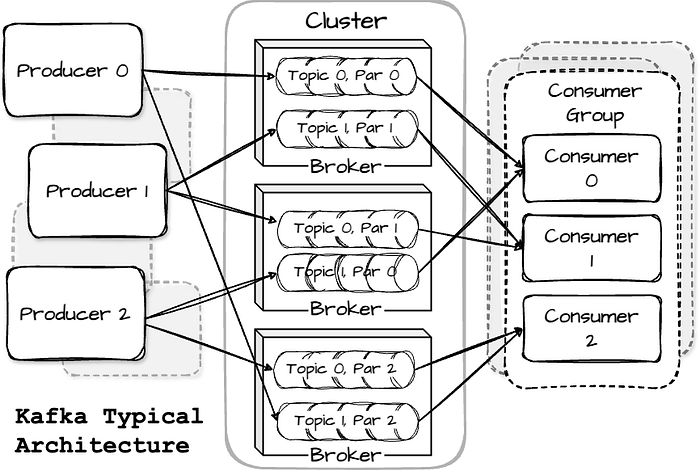

Simple Kafka Architecture Diagram

Here's a basic layout using UML component diagram style:

              +----------------------+
              |    Order Service     |
              |----------------------|
              | Emits: order.created |
              +----------+-----------+
                         |
                         v
              +----------+-----------+
              |        Kafka         |
              |----------------------|
              | Topics:              |
              |  - order.created     |
              |  - order.retry       |
              |  - order.dlq         |
              +----------+-----------+
                         |
          +--------------+------------------+
          |              |                  |
          v              v                  v
    +-----------+ +--------------+ +------------------+
    |Inventory  | |Email Service | |Analytics Service |
    |Updater    | |(Consumer)    | |(Consumer)        |
    +-----------+ +--------------+ +------------------+

Each service is an independent consumer group reacting to the same events.

The outbox pattern (not shown) is implemented on the producer side.

Final Thoughts

Kafka is not hard.

You just made it hard by skipping the patterns that make it manageable.

Treat topics as facts, keep consumer groups single-purpose, use the outbox pattern, retry wisely, and don't skip schemas.

The sooner you follow these, the sooner Kafka becomes the backbone of a robust, scalable, event-driven system — not a 3AM debugging nightmare.

If this feels like a wake-up call — good.

Because no one likes spending weekends debugging a consumer group mismatch because someone decided to "just JSON a thing real quick".

Start using the patterns. Kafka will suddenly make sense.